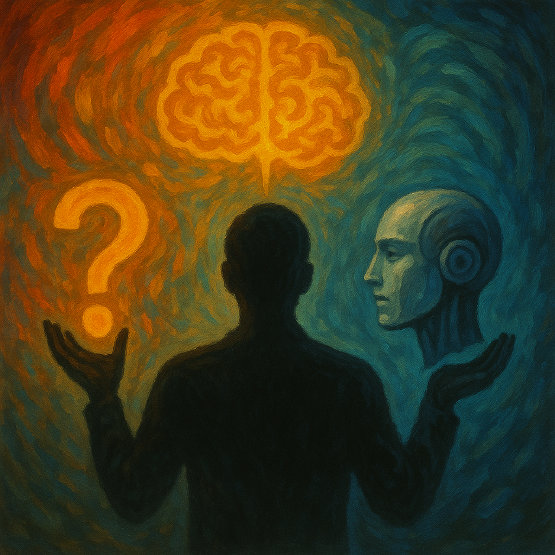

Ethics and Responsibilities of Artificial Intelligence: How Will Humans Define the Boundaries of Technology?

The Evolution of AI

We now live in an era in which technology no longer simply waits for commands at our fingertips. Instead, we coexist with a new entity that sits alongside humans at the decision-making table: artificial intelligence. AI is no longer just a calculator or a simple program. It has evolved into a 'quasi-autonomous being' that can recognize complex situations, learn independently from vast amounts of data, and exhibit judgment comparable to that of humans. From legal advice to disease diagnosis, and from financial decision-making to content creation, AI is establishing itself as a 'de facto decision-maker' with a substantial and often decisive impact on real life. Technology has moved beyond its status as a mere tool to become an active participant that interacts with humans and helps shape a new technological ecosystem.

Raising the Issue of Responsibility

These changes bring surprises and conveniences we have never experienced before, but they also quietly raise a fundamental question: 'Then who is responsible?' Many of the judgments AI makes today still occur under human direction or supervision. Its influence, however, has already grown beyond direct human control. Autonomous vehicles, for instance, can make life-and-death decisions in a fraction of a second, and AI-generated medical diagnoses significantly shape the course of actual treatment. In such cases, AI's judgments function not merely as 'suggestions' but as actual 'decisions'. To what extent, then, can humans be held accountable for these outcomes, and where should we redraw the boundaries of responsibility?

Limits of Traditional Concepts

Traditional ethics and legal systems have regarded humans as the only subjects of moral judgment and responsibility. The prevailing view has been that machines cannot be moral subjects because they lack emotions and consciousness. This premise, however, is becoming too narrow to explain a swiftly changing reality. Is it really reasonable to keep treating AI, which makes judgments, influences social and economic outcomes, and sometimes avoids risks more adeptly than humans, as merely a 'simple tool'?

Moral Responsibility of AI

Of course, recognizing artificial intelligence as an autonomous entity with moral responsibility would require philosophical discussion to advance alongside technological progress. What truly matters, however, is that in practice society has already reached that threshold and has substantively begun to accept 'the subjectivity of AI', that is, AI acting as a subject. AI decisions have become commonplace, going beyond mechanical responses and replacing human judgment in a wide range of situations. Ethical dilemmas such as the 'trolley problem' are no longer just examples in philosophy textbooks; they are real problems that the algorithms of autonomous systems must resolve in real time. Although the design is human-made, the execution takes place independently of human control.

The Necessity of a New Responsibility Model

Therefore, the issue of 'responsibility' cannot be resolved through technological advancement or theoretical precision alone. We must now design a new model of responsibility. This means shifting the relationship between humans and artificial intelligence from a one-way structure of 'command and compliance' to a 'shared responsibility structure' grounded in mutual judgment and accountability. In a society as complex as ours, where technology permeates every area of life, attributing responsibility to a single party can no longer address the problems we actually face.

The Importance of Human Attitude

At the heart of this change, the most important factor is human attitude. We may be tempted to admire the advancement of AI while gradually shifting responsibility onto technology, but there is one fact we must not forget: the people who design AI, and the organizations that deploy and operate it in society, are ultimately human. We can share judgment and responsibility with these systems, but the origin of that responsibility always lies with humans. The more advanced technology becomes, the more ethical humans must be, and the deeper their sense of responsibility must run.

Humanity at a Crossroads

We stand at a crossroads. One path stifles progress by restraining technology too tightly; the other entrusts judgment entirely to technology while humans step back. The direction we need lies between these two extremes. It is a 'third way', in which we share judgment with technology while keeping the center of responsibility firmly in human hands. Technology can make judgments, but the ability to give those judgments meaning, to bear moral responsibility for them, and to learn from them belongs to humans alone. It is in this reflective capacity that we can find hope for a future in harmony with technology.
